Longest palindromic subsequence

Not to be confused with Longest palindromic substring.

The longest palindromic subsequence (LPS) problem is the problem of finding the longest subsequence of a string (a subsequence is obtained by deleting some of the characters from a string without reordering the remaining characters) which is also a palindrome. In general, the longest palindromic subsequence is not unique. For example, the string alfalfa has four palindromic subsequences of length 5: alala, afafa, alfla, and aflfa. However, it does not have any palindromic subsequences longer than five characters. Therefore all four are considered longest palindromic subsequences of alfalfa.
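The alfalfa example can be checked by brute force. The following is a minimal sketch, not an efficient algorithm: it enumerates subsequences from longest to shortest and is exponential in the length of the string. The function name `longest_palindromic_subsequences` is a hypothetical name chosen for this illustration.

```python
from itertools import combinations


def longest_palindromic_subsequences(s: str) -> set[str]:
    """Return the distinct longest palindromic subsequences of s (brute force)."""
    for length in range(len(s), 0, -1):
        # combinations() keeps the characters in their original order,
        # so every tuple it yields is a subsequence of s.
        found = {
            "".join(chars)
            for chars in combinations(s, length)
            if chars == chars[::-1]
        }
        if found:
            return found
    return {""}  # only the empty string remains for empty input


print(sorted(longest_palindromic_subsequences("alfalfa")))
```

For alfalfa this reports exactly the four subsequences listed above.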

Precise statement

Three variations of this problem may be distinguished:

- Find the maximum possible length for a palindromic subsequence.

- Find some palindromic subsequence of maximal length.

- Find all longest palindromic subsequences.

Theorem: Returning all longest palindromic subsequences cannot be accomplished in worst-case polynomial time.

Proof[1]: Consider a string made up of $2n$ ones, followed by $n$ zeroes, and finally $n$ ones. Any palindromic subsequence either does not contain any zeroes, in which case its length is only up to $3n$, or it contains at least one zero. If it contains at least one zero, it must be of the form $1^a 0^b 1^c$, but $a$ and $c$ must be equal. (This is because the middle of the palindrome must lie somewhere within the zeroes, otherwise there would be no zeroes on one side of it and at least one zero on the other side; but as long as the middle lies within the zeroes, there must be an equal number of ones on each side.) But $b$ can only be up to $n$, and likewise with $c$, so again the palindrome cannot be longer than $3n$ characters. However, there are $\binom{2n}{n} + 1$ palindromic subsequences of length $3n$; we can either take all the ones, or we can take all $n$ zeroes, all $n$ terminal ones, and $n$ out of the $2n$ initial ones. Thus the output size is not polynomial in $n$, and then neither can the algorithm be in the worst case.
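The counting argument can be checked numerically for small cases. The sketch below assumes the construction is a string of $2n$ ones, then $n$ zeroes, then $n$ ones, and counts palindromic subsequences as selections of positions (so two selections that spell the same string are counted separately). The function name `count_longest_palindromic_selections` is hypothetical, and the brute-force search is only practical for very short strings.

```python
from itertools import combinations
from math import comb


def count_longest_palindromic_selections(s: str) -> tuple[int, int]:
    """Return (maximum length, number of index selections of that length
    whose characters spell a palindrome)."""
    for length in range(len(s), 0, -1):
        count = sum(
            1
            for idx in combinations(range(len(s)), length)
            if all(s[idx[k]] == s[idx[-1 - k]] for k in range(length // 2))
        )
        if count:
            return length, count
    return 0, 1  # the empty selection


for n in range(1, 4):
    s = "1" * (2 * n) + "0" * n + "1" * n  # assumed construction
    print(n, count_longest_palindromic_selections(s), comb(2 * n, n) + 1)
```

For each small $n$ tried here, the count printed by the search agrees with $\binom{2n}{n} + 1$, which grows at least as fast as $2^n$.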

However, this does not rule out the existence of a polynomial-time algorithm for the first two variations on the problem. We now present such an algorithm.

Algorithm

LCS-based approach

The standard algorithm for computing a longest palindromic subsequence of a given string S involves first computing a longest common subsequence (LCS) of S and its reverse S'. Often, this gives a correct LPS right away. For example, the reader may verify that alala, afafa, aflfa and alfla are all LCSes of alfalfa and its reverse aflafla. However, this is not always the case; for example, afala and alafa are also LCSes of alfalfa and its reverse, yet neither is palindromic. While any LPS of a string is also an LCS of the string and its reverse (every palindromic subsequence of S is a common subsequence of S and S', and the argument below shows that none is shorter than an LCS), the converse is false.

However, with a slight modification, this algorithm can be made to work. We will use the example string ABCDEBCA, which has six LPSes: ABCBA, ABDBA, ABEBA, ACDCA, ACECA and ACBCA (and our objective is to find one of them). Suppose that we find an LCS which is not one of these, such as L = ABDCA:

- ABCDEBCA

- ACBEDCBA

Now consider the central character C = D of L. It splits S up into two parts, the part before and the part after: S1 = ABC and S2 = EBCA. We know that the first half of L (L1 = AB) is a subsequence of S1, and that the second half of L (L2 = CA) is a subsequence of S2. Notice that S' is also split up into two parts: S'1 = ACBE = (S2)' and S'2 = CBA = (S1)'. Since L is also a subsequence of S', we see that L1 is a subsequence of S'1, and L2 is a subsequence of S'2. But the fact that L1 is a subsequence of S'1 implies (by reversing both strings) that (L1)' = BA is a subsequence of S2 = EBCA. Since L1 is a subsequence of S1 and (L1)' is a subsequence of S2, we conclude that L1C(L1)' = ABDBA is a subsequence of S1CS2 = S. So we have obtained the desired result, a longest palindromic subsequence.

Extrapolating to the general case, we can always obtain an LPS by first taking an LCS of S and S' and then "reflecting" the first half of the result onto the second half; that is, if L has k characters, then we replace the last ⌊k/2⌋ characters of L by the reverse of the first ⌊k/2⌋ characters of L to obtain a palindromic subsequence of S. The result is a palindrome by construction; it is guaranteed to be a subsequence of S by the foregoing analysis; and it is guaranteed to be as long as possible because it has the same length as an LCS, and the length of an LCS is an upper bound on the length of an LPS, since every palindromic subsequence of S is also a subsequence of S'. The odd case is handled as above, and the even case very similarly (lacking only the central character C).
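The reflection step can be sketched in a few lines on top of the textbook LCS dynamic program. This is an illustrative sketch rather than a reference implementation; the names `lcs`, `lps_via_lcs` and `is_subsequence` are hypothetical, and which LCS is returned depends on the tie-breaking in the backtracking loop.

```python
def lcs(a: str, b: str) -> str:
    """Textbook dynamic-programming LCS; returns one longest common subsequence."""
    m, n = len(a), len(b)
    # table[i][j] = length of an LCS of the suffixes a[i:] and b[j:]
    table = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(m - 1, -1, -1):
        for j in range(n - 1, -1, -1):
            if a[i] == b[j]:
                table[i][j] = table[i + 1][j + 1] + 1
            else:
                table[i][j] = max(table[i + 1][j], table[i][j + 1])
    # Walk the table from the top-left corner to recover one LCS.
    out = []
    i = j = 0
    while i < m and j < n:
        if a[i] == b[j]:
            out.append(a[i])
            i += 1
            j += 1
        elif table[i + 1][j] >= table[i][j + 1]:
            i += 1
        else:
            j += 1
    return "".join(out)


def lps_via_lcs(s: str) -> str:
    """Take an LCS of s and its reverse, then reflect its first half."""
    common = lcs(s, s[::-1])
    half = len(common) // 2
    # Keep the first ceil(k/2) characters, then append the reverse of the
    # first floor(k/2) characters in place of the original second half.
    return common[: len(common) - half] + common[:half][::-1]


def is_subsequence(t: str, s: str) -> bool:
    """Check that t can be obtained from s by deleting characters."""
    it = iter(s)
    return all(ch in it for ch in t)


result = lps_via_lcs("ABCDEBCA")
print(result, result == result[::-1], is_subsequence(result, "ABCDEBCA"))
```

The table costs time and memory proportional to the square of the length of S; the reflection itself is linear.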

The complexity of this algorithm is the same as the complexity of the LCS algorithm used. The textbook dynamic programming algorithm for LCS runs in $O(mn)$ time on strings of lengths $m$ and $n$, so the time taken to find the LPS is $O(n^2)$, where $n$ is the length of S.

Direct solution

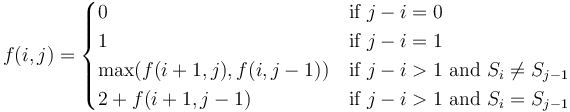

There is also a "direct" $O(n^2)$ solution, also based on dynamic programming; it is very similar in principle to the approach based on the textbook LCS algorithm. We define $\operatorname{LPS}(i, j)$ to be the length of the longest palindromic subsequence of the substring S[i,j) (see half-open interval). Assuming indexing starting from zero, the objective is to compute $\operatorname{LPS}(0, n)$.

This is easy when the substring is empty, or when it consists of only a single character; the value of $\operatorname{LPS}(i, j)$ will be 0 or 1, respectively. Otherwise,

- when the substring's first and last characters are equal, add both of them to the longest palindromic subsequence of the characters in between to get a palindromic subsequence two characters longer;

- when they are unequal, then it's clearly not possible to use both of them to form a palindromic subsequence of the given substring, so the answer will be the same as if one of them were ignored.

Thus:

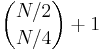
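Written out, the two base cases and the two bulleted cases above correspond to the following recurrence. This is a transcription of the prose, with $\operatorname{LPS}(i, j)$ assumed as the name for the length of the longest palindromic subsequence of S[i,j) and $S_i$ denoting the character at index $i$.

```latex
\operatorname{LPS}(i, j) =
\begin{cases}
0 & \text{if } j - i = 0, \\
1 & \text{if } j - i = 1, \\
\operatorname{LPS}(i+1, j-1) + 2 & \text{if } j - i \ge 2 \text{ and } S_i = S_{j-1}, \\
\max\bigl(\operatorname{LPS}(i+1, j),\ \operatorname{LPS}(i, j-1)\bigr) & \text{otherwise.}
\end{cases}
```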

To calculate the cost, consider that the worst case occurs when every invocation of the recurrence requires a call on two different substrings. This can happen for invocations with strings of length $n$ down to $2$. So the total number of invocations is $O(2^n)$.
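The exponential growth is easy to observe by counting calls. The sketch below evaluates the recurrence without storing any results, on strings with no repeated characters, so that no matches are ever made; `count_naive_calls` is a hypothetical helper written for this illustration.

```python
def count_naive_calls(s: str) -> int:
    """Count invocations made when the recurrence is evaluated without memoization."""
    calls = 0

    def lps(i: int, j: int) -> int:
        nonlocal calls
        calls += 1
        if j - i <= 1:
            return j - i  # empty substring -> 0, single character -> 1
        if s[i] == s[j - 1]:
            return lps(i + 1, j - 1) + 2
        return max(lps(i + 1, j), lps(i, j - 1))

    lps(0, len(s))
    return calls


for n in (4, 8, 12):
    # All characters distinct, so every call on length >= 2 branches twice.
    print(n, count_naive_calls("abcdefghijkl"[:n]))
```

With all characters distinct, the count satisfies $T(m) = 2T(m-1) + 1$ with $T(1) = 1$, which gives $2^n - 1$ calls for a string of length $n$.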

However, a lot of these invocations are repeats. If we store previously computed solutions, then the cost goes down. The above worst case happens when no matches are ever made, so we have to try every substring obtained by removing a prefix (starting at index 0) and a suffix (ending at index n-1) whose combined length is at most $n-1$. The number of such substrings is the number of ways to partition a given number into two non-negative integers, summed up over $k = 0, 1, \ldots, n-1$. The number of ways to partition $k$ into two non-negative integers is $k+1$. Hence the overall cost is $\sum_{k=0}^{n-1} (k+1) = \frac{n(n+1)}{2} = O(n^2)$.
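Storing the solutions in a table gives the quadratic algorithm directly. The following is a minimal bottom-up sketch that fills the table in order of increasing substring length; the name `lps_length` and the table layout are choices made for this illustration, not part of the original description.

```python
def lps_length(s: str) -> int:
    """Length of a longest palindromic subsequence of s, in O(n^2) time and space."""
    n = len(s)
    # table[i][j] = LPS length of the half-open substring s[i:j];
    # empty substrings (i == j) keep the initial value 0.
    table = [[0] * (n + 1) for _ in range(n + 1)]
    for i in range(n):
        table[i][i + 1] = 1  # single characters
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            if s[i] == s[j - 1]:
                # Equal end characters extend the best inner palindrome by two.
                table[i][j] = table[i + 1][j - 1] + 2
            else:
                # Otherwise one of the two end characters can be ignored.
                table[i][j] = max(table[i + 1][j], table[i][j - 1])
    return table[0][n]


print(lps_length("alfalfa"), lps_length("ABCDEBCA"))
```

Each table entry is computed once from at most two previously filled entries, which matches the $O(n^2)$ bound. Recovering an actual subsequence, rather than only its length, would additionally require walking back through the table.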

References

- [1] Jonathan T. Schneider (2010). Personal communication.

External links

- IOI '00 - Palindrome

- SPOJ:

  - Palindrome 2000 (a duplicate of the problem above)

  - Aibohphobia