Difference between revisions of "Cartesian tree"

(→Left-to-right) |

(→Left-to-right) |

||

| (2 intermediate revisions by 2 users not shown) | |||

| Line 22: | Line 22: | ||

Thus, we begin by constructing a singleton tree containing only the first element <math>a_1</math> in the sequence; this is a valid Cartesian tree for the segment of the sequence containing only the first element. Then, we repeatedly examine the next element <math>a_{i+1}</math>. At each step, we keep track of <math>v_i</math>, that is, the node that has label <math>a_i</math> (the last value inserted): | Thus, we begin by constructing a singleton tree containing only the first element <math>a_1</math> in the sequence; this is a valid Cartesian tree for the segment of the sequence containing only the first element. Then, we repeatedly examine the next element <math>a_{i+1}</math>. At each step, we keep track of <math>v_i</math>, that is, the node that has label <math>a_i</math> (the last value inserted): | ||

| − | * If <math>a_{i+1} | + | * If <math>a_{i+1} > a_i</math>, then we just insert a new node <math>v_{i+1}</math> as the right child of <math>v_i</math>, and label this new node with <math>a_{i+1}</math>. |

* Otherwise, we consider node <math>P(v_i)</math>, the parent of <math>v_i</math>. If the label of <math>P(v_i)</math> is less than or equal to <math>a_{i+1}</math>, we stop. Otherwise, we look to ''its'' parent, <math>P(P(v_i))</math>, and see whether ''its'' label is less than or equal to <math>a_{i+1}</math> and so on. If we stop at node <math>u</math>, with right child <math>w</math>, then we insert the new node <math>v_{i+1}</math> so that it is the right child of <math>u</math>, and make <math>w</math> the left child of <math>v_{i+1}</math>. | * Otherwise, we consider node <math>P(v_i)</math>, the parent of <math>v_i</math>. If the label of <math>P(v_i)</math> is less than or equal to <math>a_{i+1}</math>, we stop. Otherwise, we look to ''its'' parent, <math>P(P(v_i))</math>, and see whether ''its'' label is less than or equal to <math>a_{i+1}</math> and so on. If we stop at node <math>u</math>, with right child <math>w</math>, then we insert the new node <math>v_{i+1}</math> so that it is the right child of <math>u</math>, and make <math>w</math> the left child of <math>v_{i+1}</math>. | ||

* If we trace all the way up to the root of the tree so far, and still find no node with label less than or equal to <math>a_{i+1}</math>, then <math>a_{i+1}</math> is the smallest element seen so far; so make the new node <math>v_{i+1}</math> the root of the tree, and make the old root <math>v_{i+1}</math>'s left child. | * If we trace all the way up to the root of the tree so far, and still find no node with label less than or equal to <math>a_{i+1}</math>, then <math>a_{i+1}</math> is the smallest element seen so far; so make the new node <math>v_{i+1}</math> the root of the tree, and make the old root <math>v_{i+1}</math>'s left child. | ||

| Line 46: | Line 46: | ||

left[i] ← root | left[i] ← root | ||

root ← i | root ← i | ||

| − | + | else if right[last] = 0 // just insert it | |

| − | if right[last] = 0 // just insert it | + | |

right[last] ← i | right[last] ← i | ||

parent[i] ← last | parent[i] ← last | ||

Latest revision as of 18:02, 13 June 2016

Given a sequence of numbers (or any totally ordered objects), there exists a binary min-heap whose inorder traversal is that sequence. This is known as the Cartesian tree for that sequence.

Contents

Construction[edit]

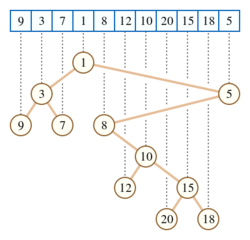

To see what such a tree would look like, imagine starting with the example sequence shown on the right. Because 1 is the smallest element in the sequence, it must occur at the root of the Cartesian tree (which is min-heap-ordered). Now, in an inorder traversal of the Cartesian tree, all the elements in the root's left subtree will be visited before the root, and all the elements in the right subtree after; so we can readily conclude that the elements 9, 3, and 7 occur in the left subtree, whereas 8, 12, 10, 20, 15, 18, and 5 occur in the right subtree.

To actually construct the left subtree, we apply this reasoning recursively; the left subtree of the root is itself min-heap-ordered, so its root must be the element 3, as it is the smallest of 9, 3, and 7. Likewise, the root of the right subtree must be 5, which is the smallest of all the elements to the right of the 1; and so on.

It is clear from this discussion that there always exists at least one valid Cartesian tree for any given sequence.

Uniqueness[edit]

Based on the technique given above to construct a Cartesian tree, and the underlying reasoning, we see that the Cartesian tree of a sequence of distinct numbers is always unique. If there are duplicates in the sequence, there may be multiple possible Cartesian trees; for example, the sequence [5, 5] has two possible Cartesian trees (one in which the root has a left child, and one in which it has a right child). On the other hand, [5, 2, 5] has only one possible Cartesian tree, even though it has duplicate elements. The precise criterion is that the Cartesian tree is unique if and only if every segment of the sequence has a unique minimum element.

We can guarantee uniqueness even with duplicates by insisting that if an element appears multiple times in a sequence then the occurrence furthest to the left is always considered to be the smallest, or by finding any other way to break ties.

Naive method[edit]

The naive method described above may take up to  time, since it requires scanning the sequence to find its minimum, then scanning the range to the left of the minimum, and scanning the range to the right, and so on down; this algorithm then has the same performance dynamics as quicksort (average

time, since it requires scanning the sequence to find its minimum, then scanning the range to the left of the minimum, and scanning the range to the right, and so on down; this algorithm then has the same performance dynamics as quicksort (average  ).

).

Left-to-right[edit]

The Cartesian tree may be constructed on one pass over the sequence. We proceed from left to right and add each value we encounter as a new node to the tree; after having processed the first  elements of the sequence, we will have a valid Cartesian tree for these initial elements. After we have processed the entire sequence (

elements of the sequence, we will have a valid Cartesian tree for these initial elements. After we have processed the entire sequence ( ), the Cartesian tree will be complete.

), the Cartesian tree will be complete.

Thus, we begin by constructing a singleton tree containing only the first element  in the sequence; this is a valid Cartesian tree for the segment of the sequence containing only the first element. Then, we repeatedly examine the next element

in the sequence; this is a valid Cartesian tree for the segment of the sequence containing only the first element. Then, we repeatedly examine the next element  . At each step, we keep track of

. At each step, we keep track of  , that is, the node that has label

, that is, the node that has label  (the last value inserted):

(the last value inserted):

- If

, then we just insert a new node

, then we just insert a new node  as the right child of

as the right child of  , and label this new node with

, and label this new node with  .

. - Otherwise, we consider node

, the parent of

, the parent of  . If the label of

. If the label of  is less than or equal to

is less than or equal to  , we stop. Otherwise, we look to its parent,

, we stop. Otherwise, we look to its parent,  , and see whether its label is less than or equal to

, and see whether its label is less than or equal to  and so on. If we stop at node

and so on. If we stop at node  , with right child

, with right child  , then we insert the new node

, then we insert the new node  so that it is the right child of

so that it is the right child of  , and make

, and make  the left child of

the left child of  .

. - If we trace all the way up to the root of the tree so far, and still find no node with label less than or equal to

, then

, then  is the smallest element seen so far; so make the new node

is the smallest element seen so far; so make the new node  the root of the tree, and make the old root

the root of the tree, and make the old root  's left child.

's left child.

Pseudocode[edit]

input sequence A with length N indexed from 1

// label the root node 1, with left child = right child = 0 (nonexistent)

root ← 1

left[1] ← 0

right[1] ← 0

// the root's label is the first item in the sequence

label[1] ← A[1]

for i ∈ [2..N]

last ← i-1 // node of A[i-1]

// create new node with label A[i]

label[i] ← A[i]

right[i] ← 0

while label[last] > A[i] and last ≠ root

last ← parent[last]

if label[last] > A[i] // A[i] is the smallest element yet; make it new root

parent[root] ← i

left[i] ← root

root ← i

else if right[last] = 0 // just insert it

right[last] ← i

parent[i] ← last

left[i] ← 0

else // reconfigure links

parent[right[last]] ← i

left[i] ← right[last]

right[last] ← i

parent[i] ← last

Proof of correctness[edit]

We define our invariant is as follows: After the loop in the above algorithm has run  times, we will have a valid Cartesian tree for the first

times, we will have a valid Cartesian tree for the first  elements of

elements of A.

This is true just before the loop runs for the first time ( ), because we will have a singleton tree that contains just the first element of

), because we will have a singleton tree that contains just the first element of  , and clearly this is a valid Cartesian tree for just that first element.

, and clearly this is a valid Cartesian tree for just that first element.

Now assume the loop has completed  iterations, but has not yet begun iteration

iterations, but has not yet begun iteration  . Then, we have a valid Cartesian tree of the first

. Then, we have a valid Cartesian tree of the first  elements of

elements of  , and we wish to insert

, and we wish to insert  . We first observe the following:

. We first observe the following:

- The last node inserted, which we will call

, has no right child. This is because

, has no right child. This is because  must come last in the inorder traversal of the tree so far, as that tree is a valid Cartesian tree of all the elements added so far; but if

must come last in the inorder traversal of the tree so far, as that tree is a valid Cartesian tree of all the elements added so far; but if  has a right child, then

has a right child, then ![\mathrm{right}[v]](/wiki/images/math/0/8/e/08eff45978879967de09cdb23a9f8e98.png) will follow

will follow  in the inorder traversal.

in the inorder traversal. -

is the right child of

is the right child of ![\mathrm{parent}[v]](/wiki/images/math/8/1/9/819ed018d1f995dbb584a19f6a0dd5e7.png) , if the latter exists.

, if the latter exists. ![\mathrm{parent}[v]](/wiki/images/math/8/1/9/819ed018d1f995dbb584a19f6a0dd5e7.png) is furthermore the right child of

is furthermore the right child of ![\mathrm{parent}[\mathrm{parent}[v]]](/wiki/images/math/5/e/3/5e3c1e50edceaa4555baa1e08416e5ad.png) , and so on all the way up to the root. If this were not the case, then

, and so on all the way up to the root. If this were not the case, then  would lie in the left subtree of some node

would lie in the left subtree of some node  , and therefore

, and therefore  would follow

would follow  in the inorder traversal, a contradiction.

in the inorder traversal, a contradiction.

Then consider the three cases covered in the code:

- If

is less than the label of the tree's root, then after we make it the new root (with the old root as its left child),

is less than the label of the tree's root, then after we make it the new root (with the old root as its left child),  will occur at the very end of the inorder traversal, since everything in the left subtree will be visited before it; but the order in which the previously inserted elements are visited will be unchanged. Also, since

will occur at the very end of the inorder traversal, since everything in the left subtree will be visited before it; but the order in which the previously inserted elements are visited will be unchanged. Also, since  is the smallest element in the tree, and it goes at the root, the tree maintains its min-heap-ordering property. Hence the invariant is preserved.

is the smallest element in the tree, and it goes at the root, the tree maintains its min-heap-ordering property. Hence the invariant is preserved. - If

, then after we have inserted a new node as the right child of

, then after we have inserted a new node as the right child of  , this node will follow

, this node will follow  in the inorder traversal, but the order in which all other elements are visited will be unchanged, so the effect is to append the new node to the end of the inorder traversal of the old tree. The new tree will also maintain its min=heap-ordering, since the new node inserted is greater than or equal to its parent. Therefore, the invariant is preserved.

in the inorder traversal, but the order in which all other elements are visited will be unchanged, so the effect is to append the new node to the end of the inorder traversal of the old tree. The new tree will also maintain its min=heap-ordering, since the new node inserted is greater than or equal to its parent. Therefore, the invariant is preserved. - If we find some proper ancestor

of the most recently inserted node, whose label is less than or equal to

of the most recently inserted node, whose label is less than or equal to  , but whose right child's label is greater than

, but whose right child's label is greater than  , then

, then  is visited immediately before all the elements in its right subtree, and those elements occur at the very end of the inorder traversal (since

is visited immediately before all the elements in its right subtree, and those elements occur at the very end of the inorder traversal (since  is descended from the root of the tree along only right edges); furthermore all the elements in

is descended from the root of the tree along only right edges); furthermore all the elements in  's right subtree are greater than or equal to the label of

's right subtree are greater than or equal to the label of ![\mathrm{right}[u]](/wiki/images/math/6/3/d/63df7051424163b65d2f44dcc5e4661e.png) . Now then, when we insert a new node as

. Now then, when we insert a new node as  's right child, and make the original right child of

's right child, and make the original right child of  the left child of the new root, we see that all the elements formerly in

the left child of the new root, we see that all the elements formerly in  's right subtree remain in

's right subtree remain in  's right subtree, that they are visited after

's right subtree, that they are visited after  in the inorder traversal and all consecutively, and that the new node is visited at the very end; we conclude that the inorder traversal of the new tree is just the inorder traversal of the old tree with the new node visited at the very end. Also, since

in the inorder traversal and all consecutively, and that the new node is visited at the very end; we conclude that the inorder traversal of the new tree is just the inorder traversal of the old tree with the new node visited at the very end. Also, since  is greater than or equal to

is greater than or equal to  's label, the min-heap-ordering is preserved at

's label, the min-heap-ordering is preserved at  ; and since it is less than the label of

; and since it is less than the label of  's original right child, the min-heap-ordering is preserved here as well; so finally we conclude that the invariant is preserved.

's original right child, the min-heap-ordering is preserved here as well; so finally we conclude that the invariant is preserved.

Analysis of running time[edit]

Observe that the loop in the algorithm given above performs at most a constant number of operations per iteration, plus some variable number of operations possibly spent ascending the tree looking for a place to insert the new element. Now define the active point of the tree to be the node pointed to by the last variable. Every time we ascend the tree by one node, we decrease the active point's depth by one. Then, either Case 1, Case 2, or Case 3 happens. In Case 1, the active point's depth is left unchanged, as it goes from being the old tree's root to being the new tree's root. In Cases 2 and 3, the active point's depth increases by one, since the new node inserted is one level deeper than its parent (the previous active point), and this new node becomes the new active point. So in total the depth of the active node cannot increase more than  times, once for each iteration of the loop. This means that it cannot decrease more than

times, once for each iteration of the loop. This means that it cannot decrease more than  times in total, either, because it starts at 0 and must finish as a non-negative integer. Hence the total number of times we ascend the tree is

times in total, either, because it starts at 0 and must finish as a non-negative integer. Hence the total number of times we ascend the tree is  across all iterations of the loop, and all the other loop operations take

across all iterations of the loop, and all the other loop operations take  time total, so the construction is completed in

time total, so the construction is completed in  time. (This analysis also shows that each of the iterations of the loop uses amortized constant time.)

time. (This analysis also shows that each of the iterations of the loop uses amortized constant time.)

Using all nearest smaller values[edit]

Another construction method relies on finding all nearest smaller values of the sequence, which can be done in linear time. That is, for each element  in the sequence, we find the maximum

in the sequence, we find the maximum  such that

such that  ; this is the nearest smaller value on the left (LNSV) . We also find the minimum

; this is the nearest smaller value on the left (LNSV) . We also find the minimum  such that

such that  , and call this the nearest smaller value on the right (RNSV).

, and call this the nearest smaller value on the right (RNSV).

Theorem: Consider the unique Cartesian tree of  obtained by considering the first of equal elements to be the smallest. (In such a tree, if node with label

obtained by considering the first of equal elements to be the smallest. (In such a tree, if node with label  has a left child with label

has a left child with label  , then

, then  , and if it has a right child with label

, and if it has a right child with label  , then

, then  .) Then, given an element

.) Then, given an element  of the sequence, excepting that it is the root of the tree, it will be either the LNSV's right child or the RNSV's left child, as determined by the following rules:

of the sequence, excepting that it is the root of the tree, it will be either the LNSV's right child or the RNSV's left child, as determined by the following rules:

- If the LNSV and RNSV both exist, the larger of the two is the parent of

in the tree. In case of a tie, the RNSV wins.

in the tree. In case of a tie, the RNSV wins. - If only the LSNV exists, it is

's parent. If only the RNSV exists, it is

's parent. If only the RNSV exists, it is  's parent.

's parent. - If neither the LNSV nor the RNSV exists,

is the root.

is the root.

Proof: Suppose that node labelled  is the right child of its parent. Then its parent must be labelled

is the right child of its parent. Then its parent must be labelled  where

where  , because

, because  occurs after

occurs after  in the inorder traversal. Also,

in the inorder traversal. Also,  . Now suppose there exists

. Now suppose there exists  such that

such that  and

and  (that is,

(that is,  is not the LNSV to

is not the LNSV to  ). Then,

). Then,  must occur after

must occur after  in the inorder traversal, but before

in the inorder traversal, but before  . This is only possible if

. This is only possible if  occurs in the left subtree of the node labelled

occurs in the left subtree of the node labelled  . However, this implies that

. However, this implies that  , a contradiction. A similar analysis shows that if

, a contradiction. A similar analysis shows that if  's parent occurs after

's parent occurs after  in the sequence

in the sequence  , it must be the RNSV of

, it must be the RNSV of  . Now, pursuant to the three cases in the statement of the Theorem:

. Now, pursuant to the three cases in the statement of the Theorem:

- Since the LNSV and RNSV exist, node labelled

is not the root. Therefore this node has a parent. The foregoing analysis shows that if node labelled

is not the root. Therefore this node has a parent. The foregoing analysis shows that if node labelled  is the right child of its parent, then the parent is labelled with the LSNV, and if it is the left child, the parent is labelled with the RNSV. Suppose the former is true and

is the right child of its parent, then the parent is labelled with the LSNV, and if it is the left child, the parent is labelled with the RNSV. Suppose the former is true and  's parent is its LNSV. Then the RNSV follows

's parent is its LNSV. Then the RNSV follows  and

and  in the inorder traversal. However, it cannot be in the right subtree of node labelled

in the inorder traversal. However, it cannot be in the right subtree of node labelled  , since its value is strictly less than

, since its value is strictly less than  . It follows that it cannot be anywhere in the right subtree of

. It follows that it cannot be anywhere in the right subtree of  either (or anywhere in the subtree rooted at

either (or anywhere in the subtree rooted at  ). Now let

). Now let  be the lowest common ancestor of the nodes labelled with

be the lowest common ancestor of the nodes labelled with  and the RNSV. If

and the RNSV. If  is the node labelled with the RNSV, then node labelled

is the node labelled with the RNSV, then node labelled  is in the RNSV's left subtree, which means

is in the RNSV's left subtree, which means  (the LNSV) is strictly greater than the RNSV, as desired. If not, then since the RNSV occurs after

(the LNSV) is strictly greater than the RNSV, as desired. If not, then since the RNSV occurs after  in the inorder traversal, it must be that the RNSV is in

in the inorder traversal, it must be that the RNSV is in  's right subtree and node labelled

's right subtree and node labelled  is in

is in  's left subtree. But then

's left subtree. But then  occurs after

occurs after  in the inorder traversal and before the RNSV, and

in the inorder traversal and before the RNSV, and  's label is strictly less than

's label is strictly less than  , which contradicts the "nearestness" of the supposed RNSV (so this case can never occur at all). A similar analysis shows that if node labelled

, which contradicts the "nearestness" of the supposed RNSV (so this case can never occur at all). A similar analysis shows that if node labelled  is the left child of its parent, then the parent is labelled with the RNSV and the RNSV is greater than or equal to the LNSV, as desired.

is the left child of its parent, then the parent is labelled with the RNSV and the RNSV is greater than or equal to the LNSV, as desired. - If only the LSNV exists, then node labelled

still cannot be the root, but all the elements following

still cannot be the root, but all the elements following  are greater than or equal to it, so node labelled

are greater than or equal to it, so node labelled  cannot be the left child of any node. Therefore it is the right child of its parent, and therefore its parent is the LNSV. Likewise, if only the RNSV exists, we see that node labelled

cannot be the left child of any node. Therefore it is the right child of its parent, and therefore its parent is the LNSV. Likewise, if only the RNSV exists, we see that node labelled  is the left child of its parent, and thus the parent must be the RNSV.

is the left child of its parent, and thus the parent must be the RNSV. - If neither the LSNV nor the RNSV exists, then

is the leftmost occurrence of the minimum element in the sequence, and hence must be at the root of the tree.

is the leftmost occurrence of the minimum element in the sequence, and hence must be at the root of the tree.

Using the preceding Theorem, after computing all nearest smaller values on both sides of each element (which requires linear time), we can determine each node's parent in constant time, so we obtain overall linear time construction.

Applications[edit]

- A range minimum query on a sequence is equivalent to a lowest common ancestor query on the sequence's Cartesian tree. Hence, RMQ may be reduced to LCA using the sequence's Cartesian tree.

- The treap, a balanced binary search tree structure, is a Cartesian tree of (key,priority) pairs; it is heap-ordered according to the priority values, and an inorder traversal gives the keys in sorted order.

- The suffix tree of a string may be constructed from the suffix array and the longest common prefix array. The first step is to compute the Cartesian tree of the longest common prefix array. Details in the Suffix tree article.