Difference between revisions of "Longest increasing subsequence"

(Created page with "The '''longest increasing subsequence''' (LIS) problem is to find an increasing subsequence (either strictly or non-strictly) of maximum length, given...") |

|||

| Line 1: | Line 1: | ||

| − | The '''longest increasing subsequence''' (LIS) problem is to find an [[subsequence#Definitions|increasing subsequence]] (either strictly or non-strictly) of maximum length, given | + | The '''longest increasing subsequence''' (LIS) problem is to find an [[subsequence#Definitions|increasing subsequence]] (either strictly or non-strictly) of maximum length, given a (finite) input sequence whose elements are taken from a partially ordered set. For example, consider the sequence [9,2,6,3,1,5,0,7]. An increasing subsequence is [2,3,5,7], and, in fact, there is no longer increasing subsequence. Therefore [2,3,5,7] is a longest increasing subsequence of [9,2,6,3,1,5,0,7]. The ''longest decreasing subsequence'' can be defined analogously; it is clear that a solution to one gives a solution to the other. |

| + | |||

| + | We will focus on the non-strict case (with some parenthetical comments about the strict case). | ||

==Discussion== | ==Discussion== | ||

| Line 15: | Line 17: | ||

For example, consider the sequence [9,2,6,3,1,5,0]. Its nonempty prefixes are [9], [9,2], [9,2,6], [9,2,6,3], [9,2,6,3,1], [9,2,6,3,1,5], and [9,2,6,3,1,5,0]. For each of these, we may find an increasing subsequence that uses the last element of maximal length, for example, [9], [2], [2,6], [2,3], [1], [2,3,5], and [0], respectively. The longest of these, [2,3,5], is then also an (unrestricted) LIS of the original sequence, [9,2,6,3,1,5,0,7]. | For example, consider the sequence [9,2,6,3,1,5,0]. Its nonempty prefixes are [9], [9,2], [9,2,6], [9,2,6,3], [9,2,6,3,1], [9,2,6,3,1,5], and [9,2,6,3,1,5,0]. For each of these, we may find an increasing subsequence that uses the last element of maximal length, for example, [9], [2], [2,6], [2,3], [1], [2,3,5], and [0], respectively. The longest of these, [2,3,5], is then also an (unrestricted) LIS of the original sequence, [9,2,6,3,1,5,0,7]. | ||

| − | Denote the LIS of <math>x</math> by <math>\operatorname{LIS}(x)</math>, and denote the LIS subject to the restriction that the last element must be used as <math>\operatorname{LIS}'( | + | Denote the LIS of <math>x</math> by <math>\operatorname{LIS}(x)</math>, and denote the LIS subject to the restriction that the last element must be used as <math>\operatorname{LIS}'(i)</math>. Then we see that <math>|\operatorname{LIS}(x)| = \max_{1 \leq i \leq n} |\operatorname{LIS}'(i)|</math>. We will now focus on calculating the <math>\operatorname{LIS}'</math> values, of which there are <math>n</math> (one for each nonempty prefix of <math>x</math>). |

| + | |||

| + | ===Optimal substructure=== | ||

| + | The dynamic substructure is not too hard to see. The value for <math>\operatorname{LIS}'(i)</math> consists of either: | ||

| + | * the element <math>x_i</math> alone, which always forms an increasing subsequence by itself, or | ||

| + | * the element <math>x_i</math> tacked on to the end of an increasing subsequence ending with <math>x_j</math>, where <math>j < i</math> and <math>x_j \leq x_i</math> (for the non-strict case) or <math>x_j < x_i</math> (for the strict case). | ||

| + | |||

| + | For example, the longest increasing subsequence that ends on the last element of <math>[9,2,6,3,1]</math> is just the element <math>1</math> itself, the element at the very end. But if we consider <math>[9,2,6,3,1,5]</math>, which has as a longest increasing subsequence ending at its last element <math>[2,3,5]</math>, we see that <math>[2,3]</math> is an increasing subsequence of <math>[9,2,6,3]</math> ending at ''its'' last element and that <math>3 < 5</math> as required. | ||

| + | |||

| + | Furthermore, it is not hard to see that the increasing subsequence we are left with after removing the last element, in the second case, must itself be optimal for the element it ends on; that is, must be a possible value of <math>\operatorname{LIS}'(j)</math>. If this were not the case, then we would have a longer increasing subsequence ending on <math>x_j</math>, and then we could tack the element <math>x_i</math> on the end to obtain an increasing subsequence ending at <math>x_i</math> that is longer than the one we originally supposed was longest --- a contradiction. | ||

| + | |||

| + | Also, if we already know <math>\operatorname{LIS}'(j)</math> for all <math>j < i</math>, then one of them, when the element <math>x_i</math> is appended to the end, must give a LIS that ends at <math>x_i</math>, unless <math>\operatorname{LIS}'(x[i])</math> consists of <math>x_i</math> only. This is because if this were not the case, then whenever we were allowed to append <math>x_i</math> we would obtain a suboptimal solution --- but then removing the last element from <math>\operatorname{LIS}'(i)</math> would give a suboptimal solution to a subinstance, which we know to be impossible. | ||

| + | |||

| + | ===Overlapping subproblems=== | ||

| + | When computing <math>\operatorname{LIS}'(x[i])</math>, we might need to know the values of <math>\operatorname{LIS}'(x[j])</math> for all <math>j < i</math>. These are the shared subinstances; there are only <math>n</math> possible values in the <math>\operatorname{LIS}'</math> table in total. | ||

| + | |||

| + | ===Implementation=== | ||

| + | The optimal substructure discussed gives a very simple formula: | ||

| + | :<math>\operatorname{LIS}'(i) = (\text{the longest out of } (\{\operatorname{LIS}'(j) \mid 1 \leq j < i \wedge x_j \leq x_i\} \cup \{[\,]\}))x_i</math> | ||

| + | (In the strict case, we have <math>x_j < x_i</math> strictly.) | ||

| + | |||

| + | We can easily compute this bottom-up, since we only need to know values of smaller subinstances. | ||

| + | |||

| + | The LIS of the entire sequence <math>x</math> is then just the largest of all <math>\operatorname{LIS}'</math> values. | ||

| + | |||

| + | ===Analysis=== | ||

| + | ====Time==== | ||

| + | If only the length is desired, the above formula becomes | ||

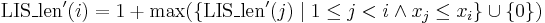

| + | :<math>\operatorname{LIS\_len}'(i) = 1 + \max (\{\operatorname{LIS\_len}'(j) \mid 1 \leq j < i \wedge x_j \leq x_i\} \cup \{0\})</math> | ||

| + | Thus, computing the <math>i^{\text{th}}</math> entry takes <math>O(i)</math> time to compute, giving <math>O(1) + O(2) + ... + O(n) = O(n^2)</math> time overall. | ||

| + | |||

| + | This bound still holds when computing the LIS itself, but since we probably wish to avoid needless copying and concatenation of sequences, a better way is to, instead of storing the <math>\operatorname{LIS}'</math> values themselves in the table, simply storing their lengths as well as making a note of the next-to-last element of the sequence (or storing a zero if there is none) so that we can backtrack to reconstruct the original sequence. | ||

| + | |||

| + | ====Memory==== | ||

| + | <math>\Theta(n)</math> memory is used. | ||

| + | |||

| + | ==A faster algorithm== | ||

| + | If the set from which the elements of <math>x</math> is taken is totally ordered, then we can do even better than this. As a matter of fact, this is usually the case, as the elements will often be integers or real numbers. This algorithm runs in <math>O(n \log n)</math> time, which is asymptotically optimal.<ref>Fredman, Michael L. (1975), "On computing the length of longest increasing subsequences", ''Discrete Mathematics'' '''11''' (1): 29–35, [http://dx.doi.org/10.1016%2F0012-365X%2875%2990103-X doi:10.1016/0012-365X(75)90103-X]</ref> | ||

| + | |||

| + | The idea behind the algorithm is that if an increasing subsequence is long, then it is useful because it might give optimal subsequences that end on later elements, and if an increasing subsequence ends on a small value, then it is useful because it is versatile concerning what may be appended to the end, but if it is short and it ends on a large value, then it is not very useful. In the <math>O(n^2)</math> algorithm already described, a lot of time is spent looking at possibly useless increasing subsequences; for example, when computing <math>\operatorname{LIS}'(i)</math>, we examine all values of <math>\operatorname{LIS}'(j)</math>, where <math>j < i</math>, even though some <math>x_j</math>'s may be larger than <math>x_i</math>. | ||

| + | |||

| + | Thus we will maintain an auxiliary array <math>a</math> indexed by LIS length. The entry <math>a[i]</math> will, at any given time, hold the least possible value for the last element of an increasing subsequence of <math>x</math> of length <math>i</math> composed of elements we have so far examined. Initially all entries of <math>a</math> will be set to <math>+\infty</math>. At the conclusion of the algorithm, the highest index at which a finite value is found is the length of the LIS. | ||

| + | |||

| + | The first thing to note is that the entries of <math>a</math> are (non-strictly) increasing. For example, consider the sequence <math>[9,2,6,3,1,5,0,7]</math>. At the conclusion of the algorithm, the array <math>a</math> will contain the values <math>[0,3,5,7,+\infty,+\infty,+\infty,+\infty]</math>. This tells us that the last element of any increasing subsequence of length 1 will be at least 0, the last element of any increasing subsequence of length 2 will be at least 3, and so on; the infinite values indicate that subsequences of those lengths do not exist. We know that <math>a</math> is increasing because it would be absurd if it were not. For example, suppose that <math>a_3 < a_2</math>, so that the least last element attainable for an increasing sequence of length 3 were less than the least last element attainable for an increasing sequence of length 2. Then we could remove the last element from the sequence of length 3 to obtain an even smaller element (possibly) at the end of an increasing sequence of length 2, which contradicts the optimality of the value we already have on file. | ||

| + | |||

| + | Now let's see how the array <math>a</math> allows us to find the length of the LIS. We consider the elements of <math>x</math> one at a time starting from <math>x_1</math>. For each element <math>x_i</math> we consider, we notice that we want to find the ''longest increasing subsequence so far discovered that ends on a value less than or equal to <math>x_i</math>''. To do this, we perform a [[binary search]] on the array <math>a</math> for the largest index <math>j</math> for which <math>a_j \leq x_i</math>, or return <math>j=0</math> if no such index exists (empty sequence). Then, we know we can obtain an increasing subsequence of length <math>j+1</math> by appending <math>x_i</math> to the end of the increasing subsequence of length <math>j</math> ending at on value <math>a_j</math>; if there is no such value then we get an increasing subsequence of length 1 by taking <math>x_i</math> by itself. What effect does this have on <math>a</math>? We know that <math>x_i < a_{j+1}</math>, and we also know that we have obtained an increasing subsequence of length <math>j+1</math> whose last element is <math>x_i</math>, which is therefore ''better'' than what we have on file for <math>a_{j+1}</math>. Therefore, we update <math>a_{j+1}</math> so that it equals <math>x_i</math>. Note that after this operation, ''<math>a</math> will still be sorted'', because <math>x_i</math> was originally less than <math>a_{j+2}</math>, so <math>a_{j+1}</math> will be less after the update, and <math>a_{j+1}</math> will still be greater than or equal to <math>a_j</math>, because <math>x_i \geq a_j</math>. After iterating through all elements of <math>x</math> and updating <math>a</math> at each step, the algorithm terminates. | ||

| + | |||

| + | ===Analysis=== | ||

| + | The reason why we expect this algorithm to be more efficient is that, by examining only <math>a</math> instead of the preceding <math>x</math>-values, all the irrelevant increasing subsequences (the ones that are both short and high at the end) are ignored, as they cannot "win" on either front and hence secure a position in <math>a</math>. | ||

| + | |||

| + | ====Time==== | ||

| + | The time taken for a binary search in the auxiliary array, of size <math>n</math>, is <math>O(\log n)</math>, and one is executed as each element of <math>x</math> is examined. Therefore this algorithm achieves the stated time bound of <math>O(n \log n)</math>. | ||

| − | == | + | ====Memory==== |

| + | Still <math>O(n)</math>, as our auxiliary array has size <math>n</math>. | ||

| + | ==References== | ||

| + | <references/> | ||

[[Category:Dynamic programming]] | [[Category:Dynamic programming]] | ||

Revision as of 00:27, 3 March 2011

The longest increasing subsequence (LIS) problem is to find an increasing subsequence (either strictly or non-strictly) of maximum length, given a (finite) input sequence whose elements are taken from a partially ordered set. For example, consider the sequence [9,2,6,3,1,5,0,7]. An increasing subsequence is [2,3,5,7], and, in fact, there is no longer increasing subsequence. Therefore [2,3,5,7] is a longest increasing subsequence of [9,2,6,3,1,5,0,7]. The longest decreasing subsequence can be defined analogously; it is clear that a solution to one gives a solution to the other.

We will focus on the non-strict case (with some parenthetical comments about the strict case).

Contents

Discussion

There are three possible ways to state the problem:

- Return all longest increasing subsequences. There may be an exponential number of these; for example, consider a sequence that starts [1,0,3,2,5,4,7,6,...]; if it has length

(even) then there are

(even) then there are  increasing subsequences of length

increasing subsequences of length  (each obtained by choosing one out of each of the pairs (1,0), (3,2), ...), and no longer increasing subsequences. Thus, this problem cannot possibly be solved efficiently.

(each obtained by choosing one out of each of the pairs (1,0), (3,2), ...), and no longer increasing subsequences. Thus, this problem cannot possibly be solved efficiently. - Return one longest increasing subsequence. This can be solved efficiently.

- Return only the maximum length that a increasing subsequence can have. This can be solved efficiently.

Reduction to LCS from non-strict case

Any increasing subsequence of a sequence is also a subsequence of the sequence sorted. For example, [9,2,6,3,1,5,0,7], when sorted, gives [0,1,2,3,5,6,7,9], and [2,3,5,7] is certainly a subsequence of this. Furthermore, if a subsequence of the original sequence is also a subsequence of the sorted sequence, then clearly it is increasing (non-strictly), and therefore an increasing subsequence of the original sequence. It follows trivially that we can compute a longest non-strictly increasing subsequence by computing a longest common subsequence of the sequence and a sorted copy of itself.

Dynamic programming solution

To compute the longest increasing subsequence contained with a given sequence ![x = [x_1, x_2, ..., x_n]](/wiki/images/math/6/2/1/621e79c67b0b6441801885ad6a9e6bbd.png) , first notice that unless

, first notice that unless  is empty, an LIS will have length at least one, and given that this is the case, it has some last element

is empty, an LIS will have length at least one, and given that this is the case, it has some last element  . Denote the (non-empty) prefixes of

. Denote the (non-empty) prefixes of  by

by ![x[1] = [x_1], x[2] = [x_1, x_2], ..., x[n] = x](/wiki/images/math/2/3/9/2396e4d2839ee5d54d6aa9dfc7b04487.png) . Then, an LIS that ends with element

. Then, an LIS that ends with element  has the property that it is an LIS that uses the last element of

has the property that it is an LIS that uses the last element of ![x[i]](/wiki/images/math/e/c/a/eca9bd72b911b96661e70a7bf689d0ca.png) . Thus, for each of the prefixes

. Thus, for each of the prefixes ![x[1], x[2], ..., x[n]](/wiki/images/math/4/1/6/416c6a38c37b23141c0aae1eef870e46.png) , we will determine an increasing subsequence that contains the last element of this prefix sequence such that no longer increasing subsequence has this property. Then, one of these must be a LIS for the original sequence.

, we will determine an increasing subsequence that contains the last element of this prefix sequence such that no longer increasing subsequence has this property. Then, one of these must be a LIS for the original sequence.

For example, consider the sequence [9,2,6,3,1,5,0]. Its nonempty prefixes are [9], [9,2], [9,2,6], [9,2,6,3], [9,2,6,3,1], [9,2,6,3,1,5], and [9,2,6,3,1,5,0]. For each of these, we may find an increasing subsequence that uses the last element of maximal length, for example, [9], [2], [2,6], [2,3], [1], [2,3,5], and [0], respectively. The longest of these, [2,3,5], is then also an (unrestricted) LIS of the original sequence, [9,2,6,3,1,5,0,7].

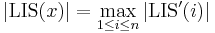

Denote the LIS of  by

by  , and denote the LIS subject to the restriction that the last element must be used as

, and denote the LIS subject to the restriction that the last element must be used as  . Then we see that

. Then we see that  . We will now focus on calculating the

. We will now focus on calculating the  values, of which there are

values, of which there are  (one for each nonempty prefix of

(one for each nonempty prefix of  ).

).

Optimal substructure

The dynamic substructure is not too hard to see. The value for  consists of either:

consists of either:

- the element

alone, which always forms an increasing subsequence by itself, or

alone, which always forms an increasing subsequence by itself, or - the element

tacked on to the end of an increasing subsequence ending with

tacked on to the end of an increasing subsequence ending with  , where

, where  and

and  (for the non-strict case) or

(for the non-strict case) or  (for the strict case).

(for the strict case).

For example, the longest increasing subsequence that ends on the last element of ![[9,2,6,3,1]](/wiki/images/math/2/8/2/2824ba6a694a1cbb33bcbc4dd0b9845a.png) is just the element

is just the element  itself, the element at the very end. But if we consider

itself, the element at the very end. But if we consider ![[9,2,6,3,1,5]](/wiki/images/math/3/3/9/33945c6653ad9b9190b8293b38741303.png) , which has as a longest increasing subsequence ending at its last element

, which has as a longest increasing subsequence ending at its last element ![[2,3,5]](/wiki/images/math/3/3/c/33cd7433211350933b73bc316943e6b8.png) , we see that

, we see that ![[2,3]](/wiki/images/math/d/5/1/d5138fec13c27bb6c645b29cdfa97a84.png) is an increasing subsequence of

is an increasing subsequence of ![[9,2,6,3]](/wiki/images/math/8/4/b/84b2b8698c67105233fed595d56323d7.png) ending at its last element and that

ending at its last element and that  as required.

as required.

Furthermore, it is not hard to see that the increasing subsequence we are left with after removing the last element, in the second case, must itself be optimal for the element it ends on; that is, must be a possible value of  . If this were not the case, then we would have a longer increasing subsequence ending on

. If this were not the case, then we would have a longer increasing subsequence ending on  , and then we could tack the element

, and then we could tack the element  on the end to obtain an increasing subsequence ending at

on the end to obtain an increasing subsequence ending at  that is longer than the one we originally supposed was longest --- a contradiction.

that is longer than the one we originally supposed was longest --- a contradiction.

Also, if we already know  for all

for all  , then one of them, when the element

, then one of them, when the element  is appended to the end, must give a LIS that ends at

is appended to the end, must give a LIS that ends at  , unless

, unless ![\operatorname{LIS}'(x[i])](/wiki/images/math/a/0/9/a09ea6dcfead478b0b4ac6bb0a096a3b.png) consists of

consists of  only. This is because if this were not the case, then whenever we were allowed to append

only. This is because if this were not the case, then whenever we were allowed to append  we would obtain a suboptimal solution --- but then removing the last element from

we would obtain a suboptimal solution --- but then removing the last element from  would give a suboptimal solution to a subinstance, which we know to be impossible.

would give a suboptimal solution to a subinstance, which we know to be impossible.

Overlapping subproblems

When computing ![\operatorname{LIS}'(x[i])](/wiki/images/math/a/0/9/a09ea6dcfead478b0b4ac6bb0a096a3b.png) , we might need to know the values of

, we might need to know the values of ![\operatorname{LIS}'(x[j])](/wiki/images/math/2/6/2/2622812a25f0ba36ff5cc6071cf50fa7.png) for all

for all  . These are the shared subinstances; there are only

. These are the shared subinstances; there are only  possible values in the

possible values in the  table in total.

table in total.

Implementation

The optimal substructure discussed gives a very simple formula:

(In the strict case, we have  strictly.)

strictly.)

We can easily compute this bottom-up, since we only need to know values of smaller subinstances.

The LIS of the entire sequence  is then just the largest of all

is then just the largest of all  values.

values.

Analysis

Time

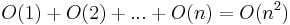

If only the length is desired, the above formula becomes

Thus, computing the  entry takes

entry takes  time to compute, giving

time to compute, giving  time overall.

time overall.

This bound still holds when computing the LIS itself, but since we probably wish to avoid needless copying and concatenation of sequences, a better way is to, instead of storing the  values themselves in the table, simply storing their lengths as well as making a note of the next-to-last element of the sequence (or storing a zero if there is none) so that we can backtrack to reconstruct the original sequence.

values themselves in the table, simply storing their lengths as well as making a note of the next-to-last element of the sequence (or storing a zero if there is none) so that we can backtrack to reconstruct the original sequence.

Memory

memory is used.

memory is used.

A faster algorithm

If the set from which the elements of  is taken is totally ordered, then we can do even better than this. As a matter of fact, this is usually the case, as the elements will often be integers or real numbers. This algorithm runs in

is taken is totally ordered, then we can do even better than this. As a matter of fact, this is usually the case, as the elements will often be integers or real numbers. This algorithm runs in  time, which is asymptotically optimal.[1]

time, which is asymptotically optimal.[1]

The idea behind the algorithm is that if an increasing subsequence is long, then it is useful because it might give optimal subsequences that end on later elements, and if an increasing subsequence ends on a small value, then it is useful because it is versatile concerning what may be appended to the end, but if it is short and it ends on a large value, then it is not very useful. In the  algorithm already described, a lot of time is spent looking at possibly useless increasing subsequences; for example, when computing

algorithm already described, a lot of time is spent looking at possibly useless increasing subsequences; for example, when computing  , we examine all values of

, we examine all values of  , where

, where  , even though some

, even though some  's may be larger than

's may be larger than  .

.

Thus we will maintain an auxiliary array  indexed by LIS length. The entry

indexed by LIS length. The entry ![a[i]](/wiki/images/math/5/9/5/595711c58954cfc2eb727cba561e3512.png) will, at any given time, hold the least possible value for the last element of an increasing subsequence of

will, at any given time, hold the least possible value for the last element of an increasing subsequence of  of length

of length  composed of elements we have so far examined. Initially all entries of

composed of elements we have so far examined. Initially all entries of  will be set to

will be set to  . At the conclusion of the algorithm, the highest index at which a finite value is found is the length of the LIS.

. At the conclusion of the algorithm, the highest index at which a finite value is found is the length of the LIS.

The first thing to note is that the entries of  are (non-strictly) increasing. For example, consider the sequence

are (non-strictly) increasing. For example, consider the sequence ![[9,2,6,3,1,5,0,7]](/wiki/images/math/a/3/e/a3e4109270a19cac82c7e91bec7564e4.png) . At the conclusion of the algorithm, the array

. At the conclusion of the algorithm, the array  will contain the values

will contain the values ![[0,3,5,7,+\infty,+\infty,+\infty,+\infty]](/wiki/images/math/e/d/f/edf8093f85a066c41d1b2a9844332692.png) . This tells us that the last element of any increasing subsequence of length 1 will be at least 0, the last element of any increasing subsequence of length 2 will be at least 3, and so on; the infinite values indicate that subsequences of those lengths do not exist. We know that

. This tells us that the last element of any increasing subsequence of length 1 will be at least 0, the last element of any increasing subsequence of length 2 will be at least 3, and so on; the infinite values indicate that subsequences of those lengths do not exist. We know that  is increasing because it would be absurd if it were not. For example, suppose that

is increasing because it would be absurd if it were not. For example, suppose that  , so that the least last element attainable for an increasing sequence of length 3 were less than the least last element attainable for an increasing sequence of length 2. Then we could remove the last element from the sequence of length 3 to obtain an even smaller element (possibly) at the end of an increasing sequence of length 2, which contradicts the optimality of the value we already have on file.

, so that the least last element attainable for an increasing sequence of length 3 were less than the least last element attainable for an increasing sequence of length 2. Then we could remove the last element from the sequence of length 3 to obtain an even smaller element (possibly) at the end of an increasing sequence of length 2, which contradicts the optimality of the value we already have on file.

Now let's see how the array  allows us to find the length of the LIS. We consider the elements of

allows us to find the length of the LIS. We consider the elements of  one at a time starting from

one at a time starting from  . For each element

. For each element  we consider, we notice that we want to find the longest increasing subsequence so far discovered that ends on a value less than or equal to

we consider, we notice that we want to find the longest increasing subsequence so far discovered that ends on a value less than or equal to  . To do this, we perform a binary search on the array

. To do this, we perform a binary search on the array  for the largest index

for the largest index  for which

for which  , or return

, or return  if no such index exists (empty sequence). Then, we know we can obtain an increasing subsequence of length

if no such index exists (empty sequence). Then, we know we can obtain an increasing subsequence of length  by appending

by appending  to the end of the increasing subsequence of length

to the end of the increasing subsequence of length  ending at on value

ending at on value  ; if there is no such value then we get an increasing subsequence of length 1 by taking

; if there is no such value then we get an increasing subsequence of length 1 by taking  by itself. What effect does this have on

by itself. What effect does this have on  ? We know that

? We know that  , and we also know that we have obtained an increasing subsequence of length

, and we also know that we have obtained an increasing subsequence of length  whose last element is

whose last element is  , which is therefore better than what we have on file for

, which is therefore better than what we have on file for  . Therefore, we update

. Therefore, we update  so that it equals

so that it equals  . Note that after this operation,

. Note that after this operation,  will still be sorted, because

will still be sorted, because  was originally less than

was originally less than  , so

, so  will be less after the update, and

will be less after the update, and  will still be greater than or equal to

will still be greater than or equal to  , because

, because  . After iterating through all elements of

. After iterating through all elements of  and updating

and updating  at each step, the algorithm terminates.

at each step, the algorithm terminates.

Analysis

The reason why we expect this algorithm to be more efficient is that, by examining only  instead of the preceding

instead of the preceding  -values, all the irrelevant increasing subsequences (the ones that are both short and high at the end) are ignored, as they cannot "win" on either front and hence secure a position in

-values, all the irrelevant increasing subsequences (the ones that are both short and high at the end) are ignored, as they cannot "win" on either front and hence secure a position in  .

.

Time

The time taken for a binary search in the auxiliary array, of size  , is

, is  , and one is executed as each element of

, and one is executed as each element of  is examined. Therefore this algorithm achieves the stated time bound of

is examined. Therefore this algorithm achieves the stated time bound of  .

.

Memory

Still  , as our auxiliary array has size

, as our auxiliary array has size  .

.

References

- ↑ Fredman, Michael L. (1975), "On computing the length of longest increasing subsequences", Discrete Mathematics 11 (1): 29–35, doi:10.1016/0012-365X(75)90103-X

![\operatorname{LIS}'(i) = (\text{the longest out of } (\{\operatorname{LIS}'(j) \mid 1 \leq j < i \wedge x_j \leq x_i\} \cup \{[\,]\}))x_i](/wiki/images/math/e/5/5/e55474191f01c6dcdf11484eb608b408.png)